Home Lab: 1 year later

Last year, I wrote a series of blog posts covering the set-up of my home lab. The first post was on my decision to run Rancher’s k3s on my Raspberry Pis. Since then, I’ve made a few modifications to how its all managed. In this post, I discuss some of these changes I made over the last year.

From terraform to cloud-init

I used to use terraform to manage my raspberry pis.

While it accomplished the job, I felt it was overkill for what I needed it to do.

In this pass, I started by looking at the system-boot partition of an Ubuntu install.

It was in there, that I found ways to hook into things like Netplan and cloud-init.

This gave me a great step forward to codifying my home lab set-up with a declarative model.

https://github.com/mjpitz/rpi-cloud-init

No more pets

I used to name my clusters something cute, usually based off of a synonym.

Gone are the days of myriad, horde, hive, and hub.

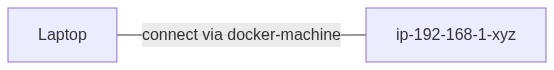

Now, I use hostnames that resemble the AWS convention.

That is, ip-192-168-1-xyz for the 192.168.1.0/24 range.

I still wrangle all of my machines using docker-machine.

It affords me a few conveniences.

One, I can easily connect to remote docker agents using docker-machine env.

This allows me to inspect containers without needing to jump to the box.

Should further inspection be needed, I can easily jump to the host using docker-machine ssh.

Home cloud

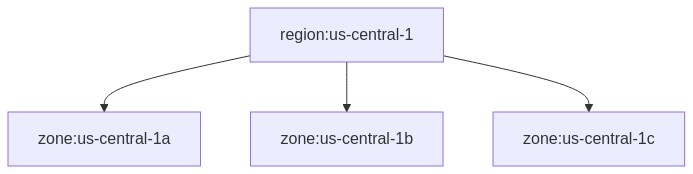

To make the most out of my set-up, I wanted to structure it based on a cloud. While I can’t have the exact set-up as a cloud provider, I can get pretty close. Each “availability zone” consists of:

- an independent power supply

- a single, raspberry pi 4 (control plane)

- four, raspberry pi 3b+ (worker)

Three of these “availability zones” compose a single “region”. Since I’m based out of us-central, I figured it would be a good starting point.

For simplicity, each zone is allocated a designated CIDR block.

us-central-1ais allocated192.168.1.50/29us-central-1bis allocated192.168.1.60/29us-central-1cis allocated192.168.1.70/29

Deploying k3s

Ever since switching to k3s, I haven’t needed any other tool. My deployment of it hasn’t changed too much. I continue to run the k3s agent through docker. One thing I do differently is pass in the appropriate topology flags.

docker run -d \

... \

--node-label "node.kubernetes.io/instance-type=rpi-4" \

--node-label "topology.kubernetes.io/region=us-central-1" \

--node-label "topology.kubernetes.io/zone=us-central-1a"From a development standpoint, there is a lot of benefit to having this information surfaced. You can test the spread of stateful workloads, enforce communication between services and geographical boundaries. One thing it affords me is the ability to test and leverage topology-aware service routing.

Leveraging GitOps

The last big change that I’ve made has been moving to a declarative deployment model for common elements. Instead of running my monitoring infrastructure outside of the cluster, I now run it inside of it. I do this through the use of a GitOps based deployment model. GitOps has become a popular way to perform declarative deployments using a Git repository as a source of truth.

To get started, I configured a repository with some common infrastructural elements. This repository is then synchronized with the cluster using tools like Flux. To simplify the manifests deployed to the cluster, I leverage the helm-operator. This allows me to easily deploy Helm charts into the cluster with the use of a single custom resource.